LinkedIn knows it has a problem with bots and fake accounts, and has acknowledged this on more than one occasion. For years, it has been aware of spam, fake job offers, phishing, fraudulent investments, and (at times) malware, and has been trying to combat those issues.

In 2018, LinkedIn rolled out a way to automatically detect fake accounts. It also gave users an inside look at what’s going on behind the scenes: a dedicated team that constantly analyzes abusive behavior, risk signals, and patterns of abuse; tools that are continuously improving; and investment in AI technologies aimed at detecting communities of fake accounts.

Now, LinkedIn is rolling out new security features to further that effort. As Oscar Rodriguez said in his post on the LinkedIn blog:

“I am eager to share that as part of our ongoing commitment to keeping LinkedIn a trusted professional community, we are rolling out new features and systems to help you make more informed decisions about members that you are interacting with and enhancing our automated systems that keep inauthentic profiles and activity off our platform. Whether you are deciding to accept an invitation, learning more about a business opportunity, or exchanging contact information, we want you to be empowered to make decisions having more signals about the authenticity of accounts.”

What’s new?

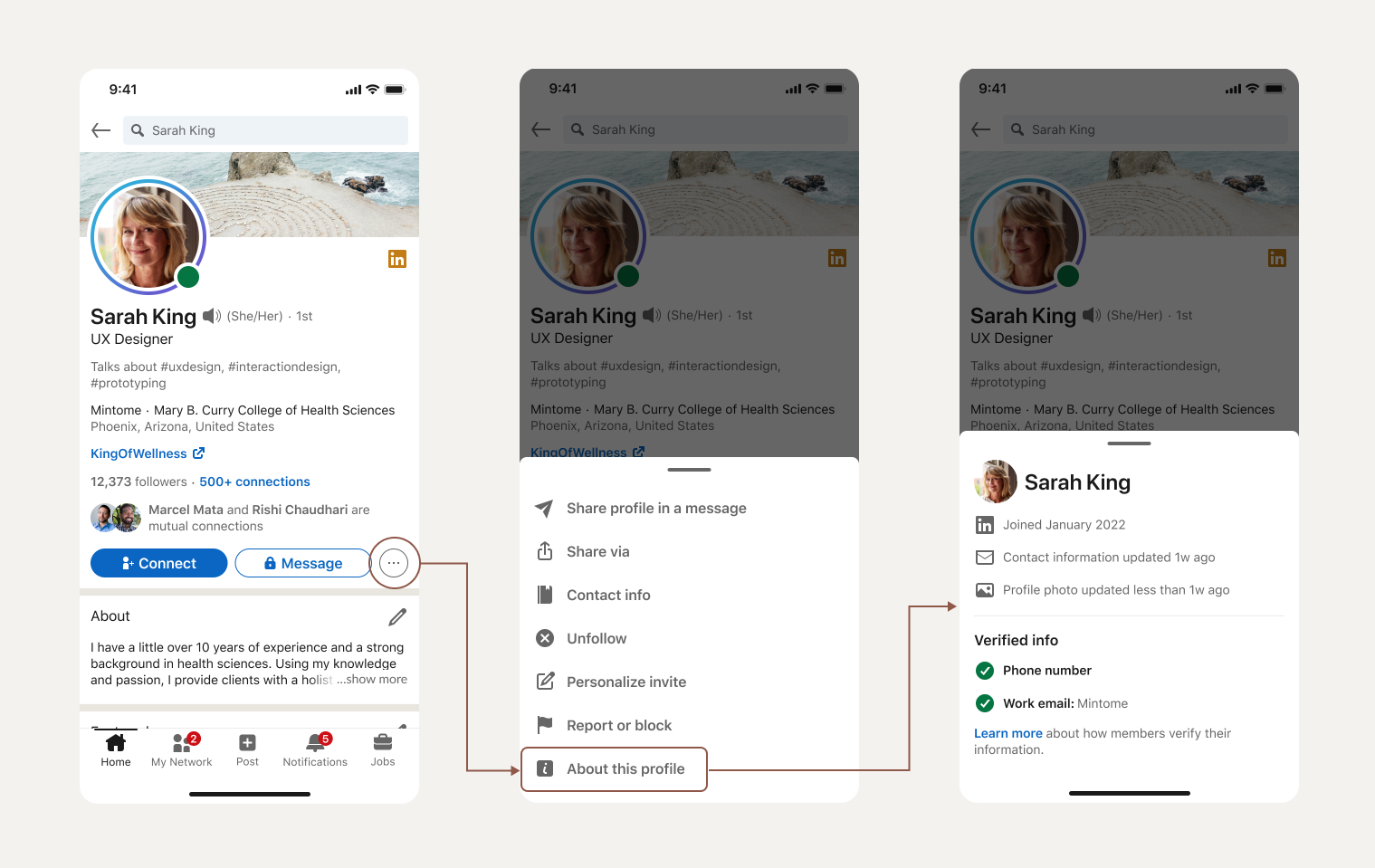

The “About this profile” feature. This new section of a LinkedIn profile shows when the profile was created, when it was last updated, and whether the account is associated with a verified phone number or work email address. This feature has already been rolled out.

Tech that analyzes profile pictures. As AI-based synthetic image generation (often called deepfake technology) has grown in sophistication, automated detection has become indispensable for telling genuine profile photos from AI creations. LinkedIn’s deep-learning tech looks for subtle artifacts, often invisible to the naked eye, that are associated with AI-generated images. Accounts with positive detections will be removed before they can be used to reach out to members.

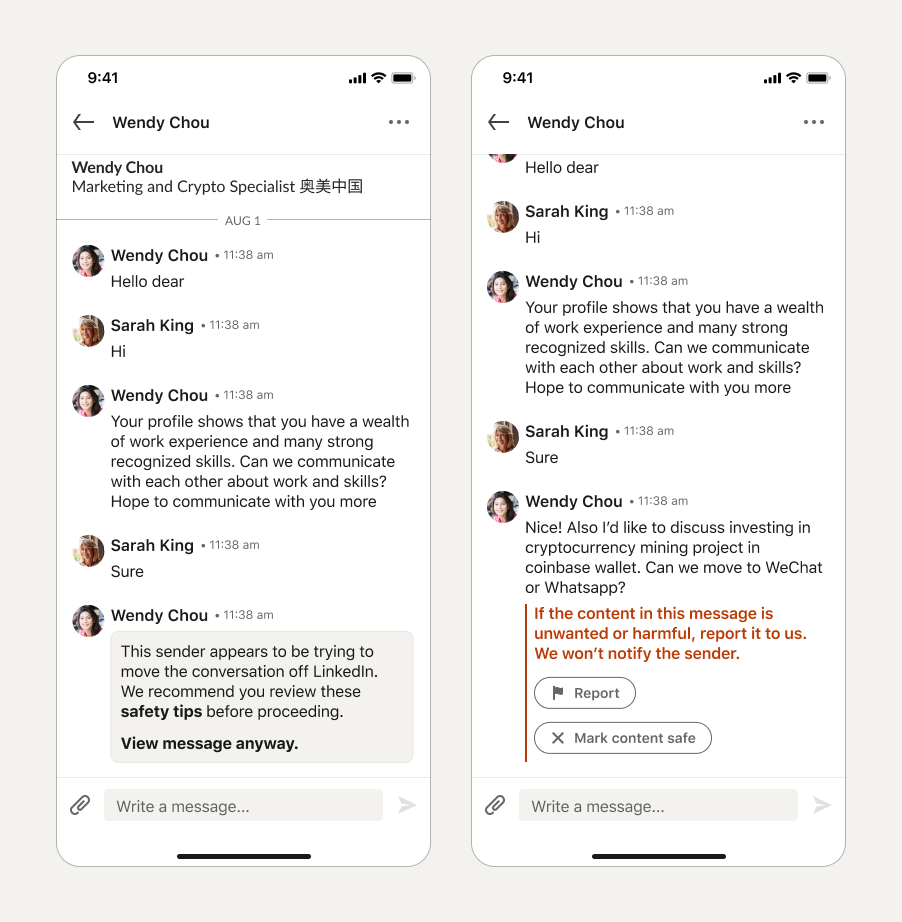

Flags that alert users to suspicious behavior. One known tactic of those with ill intent is to encourage a potential victim to continue the conversation away from the platform where they first met, usually over email or instant messaging. Scammers and fraudsters have employed this same tactic on LinkedIn. The platform now warns potential targets when the person they’re talking to suggests moving elsewhere.

“This sender appears to be trying to move the conversation off LinkedIn. We recommend you review these safety tips before proceeding,” reads the warning. LinkedIn initially hides the sender’s message; clicking “View message anyway” displays it.
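LinkedIn has not published how it detects these messages. Purely as an illustration of the idea, a minimal sketch of a keyword-based check might look like the following. The patterns, the function names `suggests_leaving_platform` and `render_message`, and the `reveal` flag are all hypothetical and are not part of any LinkedIn API; a production system would almost certainly rely on far richer signals.

```python
import re

# Hypothetical patterns for illustration only; not LinkedIn's actual rules.
OFF_PLATFORM_PATTERNS = [
    # Something that looks like an email address.
    re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    # A mention of a well-known messaging app.
    re.compile(r"\b(whatsapp|telegram|skype|wechat)\b", re.IGNORECASE),
    # Phrases such as "email me at" or "message me on".
    re.compile(r"\b(text|email|message|contact|reach)\s+me\s+(at|on|via)\b",
               re.IGNORECASE),
]

# Warning text as quoted in the article.
WARNING = (
    "This sender appears to be trying to move the conversation off LinkedIn. "
    "We recommend you review these safety tips before proceeding."
)


def suggests_leaving_platform(message: str) -> bool:
    """Return True if any of the illustrative patterns matches the message."""
    return any(pattern.search(message) for pattern in OFF_PLATFORM_PATTERNS)


def render_message(message: str, reveal: bool = False) -> str:
    """Show the warning in place of a flagged message until the reader opts in.

    `reveal` stands in for the reader clicking "View message anyway".
    """
    if suggests_leaving_platform(message) and not reveal:
        return WARNING
    return message
```

A simple check like this would produce both false positives and false negatives, which is one reason the reader is given the final say on whether to view the message.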

Stronger together

While the tools that keep users safe and give them confidence in their decisions continue to improve, the community’s involvement remains a powerful and effective deterrent against cybercriminals. LinkedIn encourages its users to be wary and to report anything strange they see on the platform, such as:

- People asking for money (in the form of cryptocurrency or gift cards) so you can claim a prize or other winnings

- People expressing their romantic interest in you (this is generally frowned upon and is considered highly inappropriate on the platform)

- A job posting that sounds too good to be true

- A job posting that asks for an upfront fee for anything

Keep in mind these red flags, too:

- Profiles with abnormal profile images

- Profiles with inconsistencies in their work history and education

- Profiles with bad grammar, which should make you question their credibility and legitimacy

- New profiles with no common connections, generic names, or few connections

Stay safe!
